AI for Healthcare Projects

Improving Palliative Care with Deep Learning

Anand Avati, Kenneth Jung, Stephanie Harman, Lance Downing, Andrew Ng, Nigam Shah

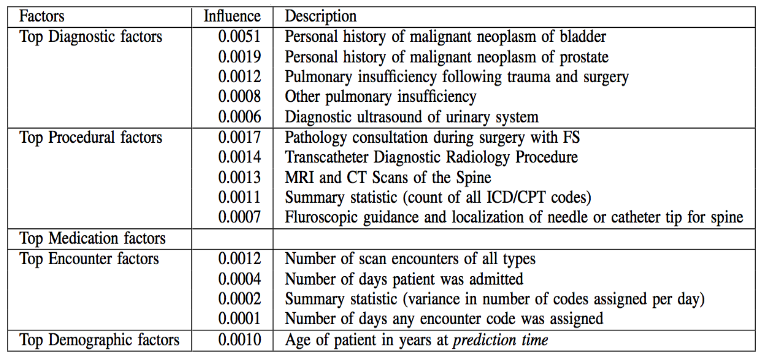

We developed a deep learning approach that uses electronic health record (EHR) data to identify hospitalized patients who may have palliative care needs. For patients the model flags with high probability, the approach generates a report, built using careful ablation techniques, that highlights the factors in the patient's EHR data that contributed most to the prediction. A later version of the model has been deployed at the Stanford Medical Center and has improved the care of over 2,000 patients.
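To illustrate what an ablation-based report can look like, here is a minimal sketch. It assumes a simple per-feature ablation: zero out one factor at a time, re-score the patient, and rank factors by how much the predicted probability drops. The scoring function, feature names, and weights are hypothetical placeholders (a toy logistic model standing in for the deep network), not the authors' model or their actual EHR features.

```python
import math
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]


def toy_model(x: Features) -> float:
    # Hypothetical stand-in for the deep learning model: a logistic model
    # with made-up weights over made-up EHR-derived feature names.
    weights = {"dx_metastatic_cancer": 2.0, "num_admissions_12mo": 0.3, "age_over_80": 1.0}
    bias = -3.0
    z = bias + sum(weights.get(name, 0.0) * value for name, value in x.items())
    return 1.0 / (1.0 + math.exp(-z))


def ablation_report(
    model: Callable[[Features], float], x: Features, top_k: int = 3
) -> List[Tuple[str, float]]:
    # Score the full record once, then remove one factor at a time.
    base = model(x)
    drops = []
    for name in x:
        ablated = dict(x)
        ablated[name] = 0.0  # ablate this factor from the patient's record
        drops.append((name, base - model(ablated)))
    # Factors whose removal lowers the probability most are ranked first.
    drops.sort(key=lambda item: item[1], reverse=True)
    return drops[:top_k]


patient = {"dx_metastatic_cancer": 1.0, "num_admissions_12mo": 4.0, "age_over_80": 1.0}
for name, drop in ablation_report(toy_model, patient):
    print(f"{name}: {drop:.3f}")
```

Ablating single features is the simplest variant; with a real EHR model one would more plausibly ablate groups of related entries (for example, all codes of one type or from one time window), since individual codes are sparse and correlated.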
