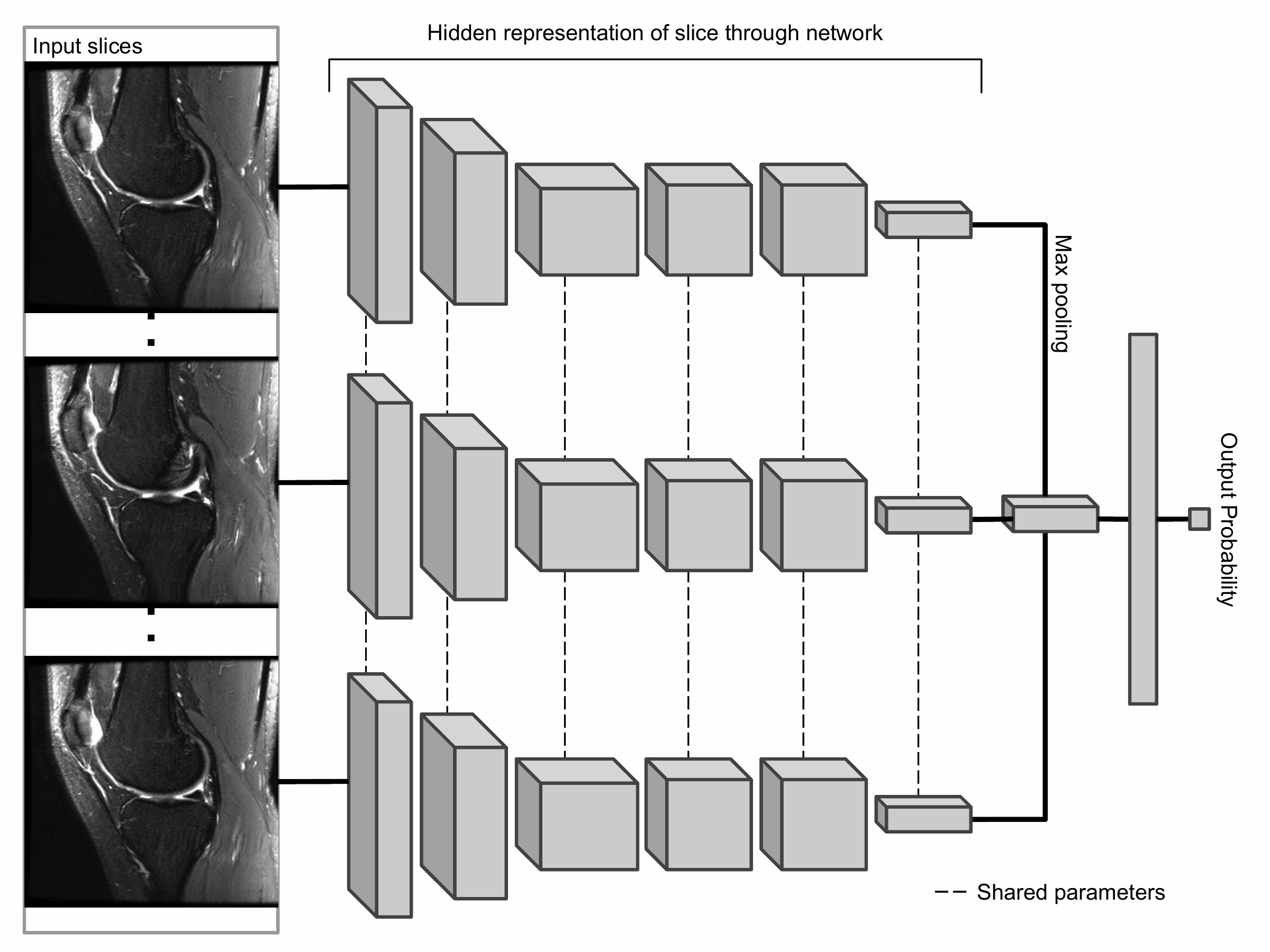

The primary building block of our prediction system is MRNet, a convolutional neural network (CNN) mapping a 3-dimensional MRI series to a probability.

The input to MRNet has dimensions s × 3 × 256 × 256, where s is the number of images in the MRI series and 3 is the number of color channels. First, each 2-dimensional MRI slice is passed through a feature extractor to obtain an s × 256 × 7 × 7 tensor containing features for each slice. A global average pooling layer then reduces these features to s × 256. We then apply max pooling across slices to obtain a 256-dimensional vector, which is passed to a fully connected layer followed by a sigmoid activation to obtain a prediction probability between 0 and 1.
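The tensor shapes described above can be traced with a short NumPy sketch of the pooling and classification head. This is an illustration under stated assumptions, not the authors' implementation: the convolutional feature extractor is not reproduced, so random numbers stand in for its s × 256 × 7 × 7 output, and the fully connected weights (`weight`, `bias`) and the helper name `mrnet_head` are hypothetical.

```python
import numpy as np


def mrnet_head(slice_features: np.ndarray, weight: np.ndarray, bias: float) -> float:
    """Map per-slice feature maps of shape (s, 256, 7, 7) to one probability.

    slice_features: output of the per-slice feature extractor (assumed given).
    weight, bias: hypothetical parameters of the final fully connected layer (256 -> 1).
    """
    if slice_features.ndim != 4 or slice_features.shape[1:] != (256, 7, 7):
        raise ValueError(f"expected (s, 256, 7, 7), got {slice_features.shape}")
    # Global average pooling over the 7 x 7 spatial grid: (s, 256, 7, 7) -> (s, 256)
    pooled = slice_features.mean(axis=(2, 3))
    # Max pooling across slices: (s, 256) -> (256,)
    exam_vector = pooled.max(axis=0)
    # Fully connected layer producing a single logit
    logit = float(exam_vector @ weight + bias)
    # Sigmoid maps the logit to a probability in (0, 1)
    return float(1.0 / (1.0 + np.exp(-logit)))


rng = np.random.default_rng(0)
s = 24  # hypothetical number of slices in one series
features = rng.standard_normal((s, 256, 7, 7))  # stand-in for extractor output
w = rng.standard_normal(256) * 0.01  # hypothetical FC weights
p = mrnet_head(features, w, 0.0)
print(f"probability: {p:.3f}")
```

Because the slice dimension is collapsed by a maximum, the same head accepts series with any number of slices, and its output does not depend on the order in which slices are presented.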

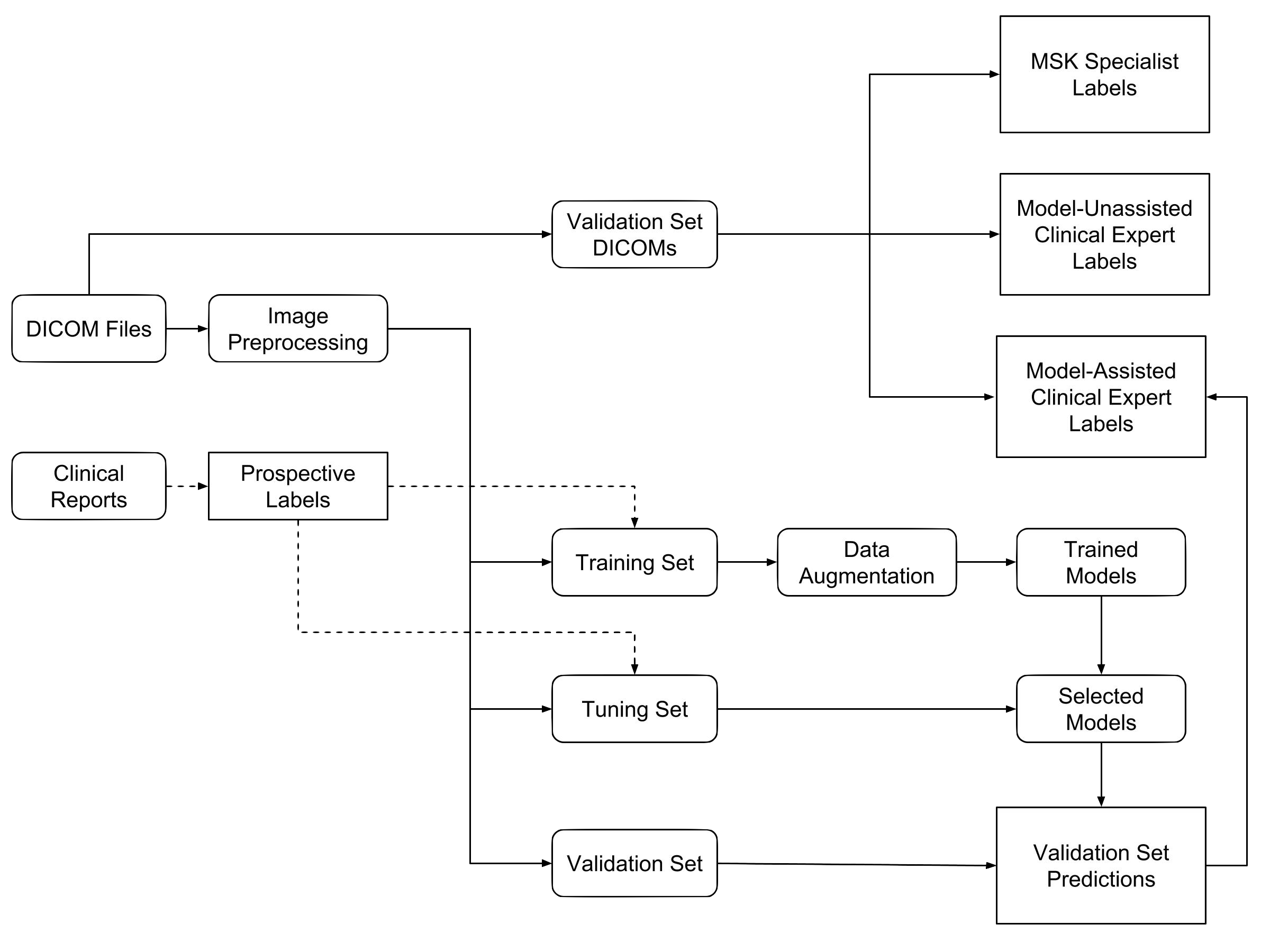

Because MRNet produces a separate prediction for each of the sagittal T2, coronal T1, and axial proton density (PD) series, we train a logistic regression model that weights the three series-level predictions and combines them into a single output for each exam.
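The combination step can be sketched as a logistic regression whose three input features are the per-series probabilities. This is a minimal sketch, assuming the model is fit by plain batch gradient descent on the mean log-loss; the source does not say how the authors fit it, and the learning rate, step count, helper names (`fit_logistic`, `combine`), and the six toy exams below are hypothetical, not data from the study.

```python
import numpy as np


def fit_logistic(X: np.ndarray, y: np.ndarray, lr: float = 0.5, steps: int = 5000):
    """Fit w, b so that sigmoid(X @ w + b) approximates the labels y (0 or 1)."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        grad = p - y  # derivative of the log-loss with respect to each logit
        w -= lr * (X.T @ grad) / n
        b -= lr * grad.mean()
    return w, b


def combine(series_probs: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    """Weight the per-series probabilities into one exam-level probability."""
    return 1.0 / (1.0 + np.exp(-(series_probs @ w + b)))


# Hypothetical toy exams; columns: sagittal T2, coronal T1, axial PD MRNet outputs
X = np.array([
    [0.9, 0.8, 0.7],
    [0.8, 0.6, 0.9],
    [0.7, 0.9, 0.8],
    [0.2, 0.3, 0.1],
    [0.1, 0.2, 0.3],
    [0.3, 0.1, 0.2],
])
y = np.array([1, 1, 1, 0, 0, 0], dtype=float)  # hypothetical exam labels

w, b = fit_logistic(X, y)
print("series weights:", np.round(w, 2), "bias:", round(b, 2))
print("exam probabilities:", np.round(combine(X, w, b), 3))
```

The learned weights indicate how much each series contributes to the exam-level prediction; in practice this combiner would be fit on MRNet outputs for held-out exams rather than on toy values.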